How do search engines use sitemaps?

At Edge45, we’ve dealt with sitemaps of all sizes and complexities for our clients. So, we thought we’d pass on what we know about sitemaps: what they are, how search engines use them and why they matter. But let’s start at the start…

How search engines crawl and index

Before we get into sitemaps themselves, it’s important to understand from an SEO point of view how search engines crawl and index your website.

Search engine crawling is the process in which search engines send out their crawlers (called ‘bots’) to follow links throughout the web to find new or updated content.

Search engines then store and organise the content found during the crawling process. If they deem the content to be valuable, it will be indexed. This matters because a page can only start to rank for relevant search terms once it has been indexed, and that is what lets search engines improve a user’s experience for those queries.

What are sitemaps?

A sitemap is a file that provides search engines with information about assets such as the webpages on your website. It’s all in the name really – a map of your site provided by you to help Google find its way to the good stuff.

Search engines will read this file and follow the links included to crawl your site more efficiently.

Your sitemap should include key pages that you think are important and want search engines to consider for indexing and ranking purposes.

Sitemaps are usually XML (Extensible Markup Language) files. An XML sitemap includes the extra data Google needs to prioritise the pages on your website when it crawls.
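To make the XML format more concrete, here is a minimal sketch in Python that builds a sitemap using only the standard library (Python 3.8 or later). The function name `build_sitemap` and the example URLs and dates are our own placeholders for illustration, not part of any tool. Only the `<loc>` and `<lastmod>` elements from the sitemaps.org protocol are shown.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return a UTF-8 encoded XML sitemap for (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages, for illustration only.
example_pages = [
    ("https://www.yourdomain.com/", "2024-01-15"),
    ("https://www.yourdomain.com/blog/what-is-a-sitemap", "2024-02-01"),
]

sitemap_xml = build_sitemap(example_pages)
print(sitemap_xml.decode("utf-8"))
```

In practice most content management systems and SEO plugins generate this file for you, so treat the sketch as a way to understand the structure rather than something you need to write yourself.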

An HTML sitemap is simply a hypertext list of the pages on your website in the form of a directory or table of contents. These are far more useful than XML sitemaps for users navigating your site.

Important types of sitemap

- XML Sitemap Index: A sitemap index is a file that lists multiple individual XML sitemap files. For example, a large website might have individual XML sitemaps for its Category pages, Product pages, Blog posts etc. The sitemap index will list these three individual XML sitemaps for search engines to discover.

- Static & Dynamic XML Sitemap: Using crawling tools, it’s easy to create a static XML sitemap. The problem is that a static XML sitemap becomes out of date as soon as your website changes and pages are added/removed. Dynamic XML sitemaps automatically update to reflect website changes as they occur and are therefore preferred to static XML sitemaps.

- HTML Sitemap: An HTML sitemap is there to assist users navigating the website, but if you have a logical website navigation and hierarchy you shouldn’t need to rely on an HTML sitemap for users to navigate your site.
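As a rough illustration of the sitemap index described in the list above, the sketch below builds an index that points at three child sitemaps. The function name `build_sitemap_index` and the three file names are assumptions made up for this example. The `<sitemapindex>`, `<sitemap>` and `<loc>` elements come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(sitemap_urls):
    """Return a UTF-8 encoded sitemap index listing the given sitemap URLs."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = sitemap_url
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


# Hypothetical child sitemaps for category, product and blog pages.
child_sitemaps = [
    "https://www.yourdomain.com/sitemap-categories.xml",
    "https://www.yourdomain.com/sitemap-products.xml",
    "https://www.yourdomain.com/sitemap-blog.xml",
]

index_xml = build_sitemap_index(child_sitemaps)
print(index_xml.decode("utf-8"))
```

You would then give search engines the index URL rather than each child sitemap individually.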

The importance of an XML sitemap

Search engines should be able to find all your key pages as they crawl your website following the internal link structure. For small, simple websites an XML sitemap isn’t needed.

The larger and more complex a website is, the more search engines will rely on XML sitemaps to find new or updated content.

Either way, we would still recommend setting up an XML sitemap, as it will help search engines find important new or updated content and index the changes more quickly. Google also states that in most cases, your site will benefit from having a sitemap.

- Google: “If your site’s pages are properly linked, Google can usually discover most of your site. Proper linking means that all pages that you deem important can be reached through some form of navigation, be that your site’s menu or links that you placed on pages. Even so, a sitemap can improve the crawling of larger or more complex sites, or more specialized files. However, in most cases, your site will benefit from having a sitemap.”

- Bing: “Sitemaps are an excellent way to tell Bing about URLs on your site that would be otherwise hard to discover by our web crawlers.”

XML sitemap best practice checklist

- Your sitemap file must be UTF-8 encoded

- Be sure to specify an accurate URL and last modified date

Google: “Google uses the <lastmod> value if it’s consistently and verifiably (for example by comparing to the last modification of the page) accurate.”

Bing: “We are revamping our crawl scheduling stack to better utilize the information provided by the “lastmod” tag in sitemaps. This will enhance our crawl efficiency by reducing unnecessary crawling of unchanged content and prioritizing recently updated content.”

For full details on the XML format please visit: https://www.sitemaps.org/protocol.html

- Reference your XML sitemap in your robots.txt to help search engines discover it

Add the following line to your robots.txt: Sitemap: https://www.yourdomain.com/sitemap.xml
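If you want to check that the line is being picked up, one option is Python’s built-in robots.txt parser, which exposes any declared sitemaps through `site_maps()` (available from Python 3.8). The robots.txt content below, including the `/checkout/` rule, is a made-up example. For a live site you could call `set_url()` and `read()` on the parser instead of passing the text in directly.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
robots_txt = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://www.yourdomain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns a list of sitemap URLs, or None if none are declared.
declared_sitemaps = parser.site_maps()
print(declared_sitemaps)
```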

- Adhere to the size limits: each sitemap file can be at most 50MB (uncompressed) and contain at most 50,000 URLs
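For larger sites, one simple way to stay under the URL limit is to split the full URL list into batches and write one sitemap file per batch. The sketch below shows the batching step only. The helper name `split_into_sitemaps` and the generated product URLs are hypothetical, and the code checks the URL count only, so the 50MB file size limit would still need a separate check.

```python
MAX_URLS_PER_SITEMAP = 50_000  # URL limit per sitemap file


def split_into_sitemaps(urls, max_urls=MAX_URLS_PER_SITEMAP):
    """Yield lists of at most max_urls URLs, one list per sitemap file."""
    for start in range(0, len(urls), max_urls):
        yield urls[start:start + max_urls]


# Hypothetical URL list for a large product catalogue.
all_urls = [f"https://www.yourdomain.com/product/{n}" for n in range(120_000)]

chunks = list(split_into_sitemaps(all_urls))
print([len(chunk) for chunk in chunks])  # [50000, 50000, 20000]
```

Each batch would become its own XML sitemap, and the resulting files can then be listed in a sitemap index.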

- Only include important pages you want indexed. Be sure to remove:

  - URLs that redirect (3xx), are broken or missing (4xx) or return server errors (5xx)

  - Paginated pages

  - Non-canonical pages

  - Pages blocked by robots.txt

  - Parameter URLs

  - Pages with a noindex tag
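The exclusion list above can be turned into a simple filter over crawl data. The sketch below is one possible approach, not how any particular crawling tool works. The `CrawledPage` record and its fields are assumptions about what your crawl export contains. It also treats any URL with a query string as a parameter URL, which catches pagination such as `?page=2` but not path-based pagination such as `/page/2/`.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass
class CrawledPage:
    """Hypothetical record for one crawled URL."""
    url: str
    status_code: int
    canonical_url: str
    noindex: bool = False
    blocked_by_robots: bool = False


def belongs_in_sitemap(page):
    """Return True if the page passes every exclusion rule."""
    if page.status_code != 200:         # drops 3xx, 4xx and 5xx responses
        return False
    if page.canonical_url != page.url:  # non-canonical page
        return False
    if page.noindex or page.blocked_by_robots:
        return False
    if urlsplit(page.url).query:        # parameter URL, e.g. ?page=2
        return False
    return True


# Made-up crawl results, for illustration only.
pages = [
    CrawledPage("https://www.yourdomain.com/services", 200,
                "https://www.yourdomain.com/services"),
    CrawledPage("https://www.yourdomain.com/old-page", 301,
                "https://www.yourdomain.com/old-page"),
    CrawledPage("https://www.yourdomain.com/blog?page=2", 200,
                "https://www.yourdomain.com/blog?page=2"),
    CrawledPage("https://www.yourdomain.com/thank-you", 200,
                "https://www.yourdomain.com/thank-you", noindex=True),
]

sitemap_urls = [page.url for page in pages if belongs_in_sitemap(page)]
print(sitemap_urls)
```

Only the `/services` URL survives here, because the other three each break one of the rules.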

- Submit your XML sitemap via Google Search Console and Bing Webmaster Tools

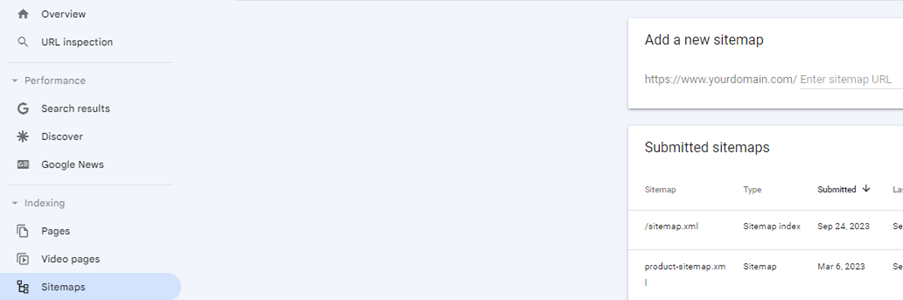

In Google Search Console, select “Sitemaps” in the left-hand navigation, enter the URL of your XML sitemap and hit the “Submit” button.

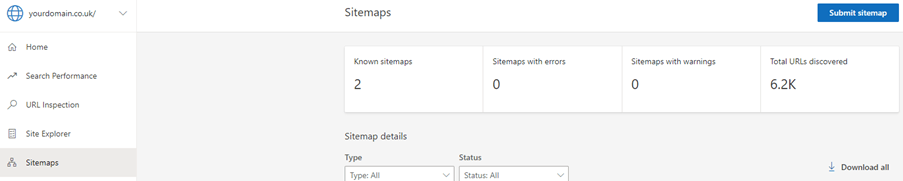

In Bing Webmaster Tools, select “Sitemaps” in the left-hand navigation and click “Submit sitemap”. Then enter your XML sitemap URL and hit the “Submit” button.

- Use sitemap reporting to help find and then fix errors

Once your sitemap is submitted, you can review the page indexing reports for the pages it contains. These show which pages are already indexed, which aren’t, and the reasons why.

Do you really need a sitemap?

Yes. While search engines can find and index all your pages without a sitemap, an optimised XML sitemap signals which pages you consider high quality and want indexed. Creating and submitting an XML sitemap will therefore improve your chances of getting new or updated pages indexed more quickly.